If you write software in 2025, you’re probably asking a simple question with a complicated answer: is OpenAI’s GPT-5 or Anthropic’s Claude Sonnet-4 the better coding partner? The short version: GPT-5 is currently edging ahead on raw, “fix-this-issue” benchmarks, while Sonnet-4 (Claude) still draws praise for calm, coherent edits across larger codebases. Let’s unpack the data and the vibes.

Benchmarks to watch (not marketing slides):

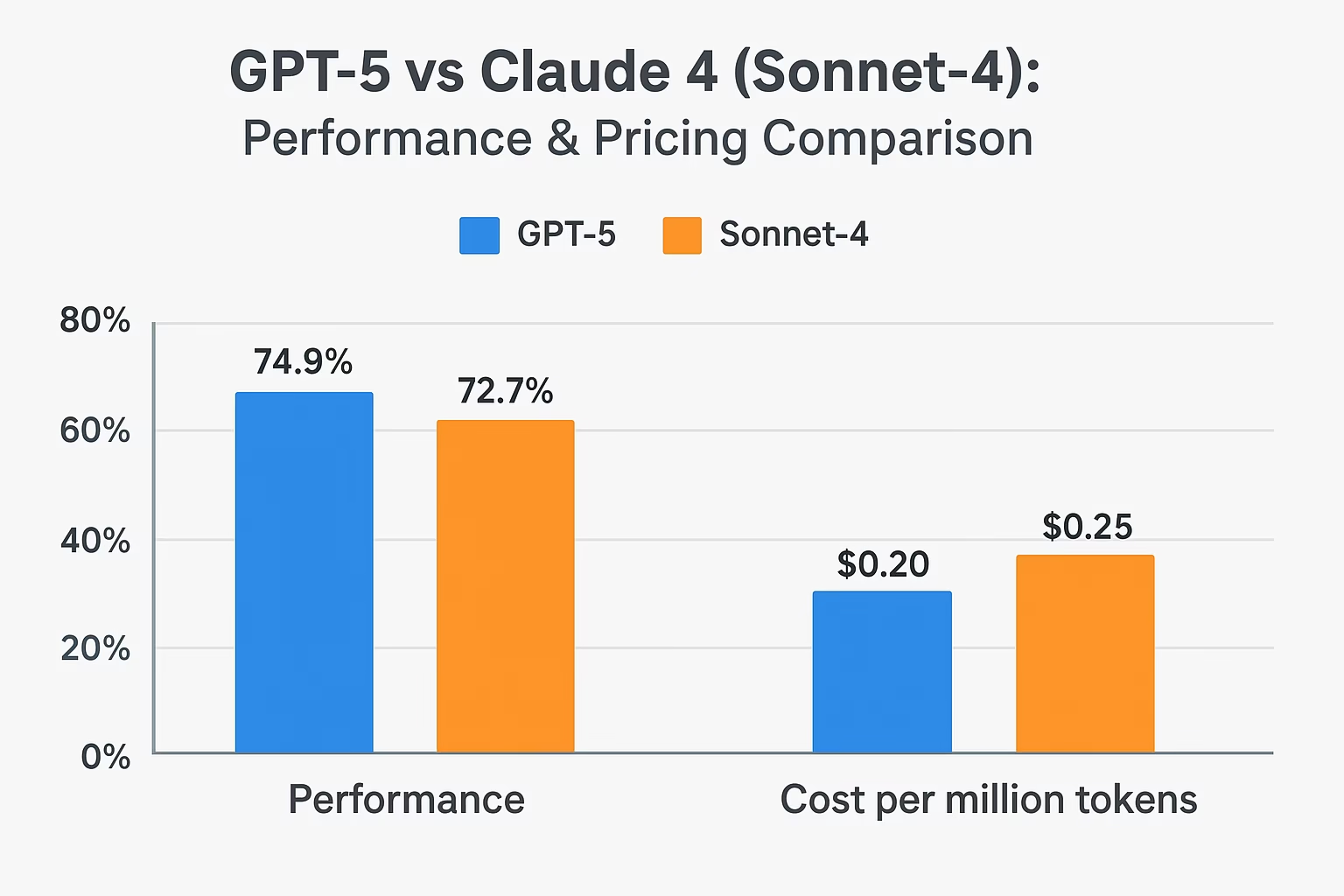

OpenAI reports that GPT-5 is setting new marks on coding tasks, such as SWE-bench Verified and Aider’s Polyglot evals (e.g., 74.9% and 88%, respectively, according to OpenAI’s developer notes). OpenAI

Independent leaderboards tell a subtler story: recent SWE-bench results list GPT-5 and Claude Sonnet-4 essentially neck-and-neck at the top, with both far ahead of older models. SWE-bench

For historical context, Anthropic’s earlier Claude 3.5 Sonnet achieved 49% on the SWE-bench Verified—already a notable leap at the time, demonstrating Anthropic’s steady progress in agentic coding. Anthropic Latent Space

How devs say it feels:

Early industry coverage describes GPT-5 as powerful but somewhat inconsistent, exhibiting strong planning and technical reasoning, yet sometimes producing verbose code or redundant edits. WIRED

Broader tech press echoed a “good but not iPhone-moment” reception—incremental speed/cost gains and fewer hallucinations, with notable strengths in coding. The Verge

On the other hand, some practitioners prefer GPT-5’s feature set and speed in everyday work. Tom’s Guide

Developer threads still praise Claude/Sonnet for document-aware refactors and staying on-task inside bigger projects—though opinions vary, and cost can sway choices. Reddit+1

When GPT-5 shines

- Benchmark-driven bug fixing: If you’re triaging GitHub issues end-to-end, GPT-5 often lands a working patch quickly. OpenAISWE-bench

- Tool-heavy workflows: GPT-5’s ecosystem and agent patterns are maturing fast for tests, builds, and code search. (OpenAI highlights this “true coding collaborator” framing.) OpenAI

When Sonnet-4 (Claude) shines

- Large-scope edits with less thrash: Many devs report Claude/Sonnet sticks closer to requirements and touches fewer unrelated files, which matters in monorepos. Reddit

- Long-form reasoning continuity: Anthropic’s lineage is known for gentle, readable diffs and strong tool use on complex tasks. Latent Space

Tiny demo: test-first bug fix (Python)

# failing test

def test_slugify_handles_unicode():

assert slugify("Crème Brûlée!") == "creme-brulee"

# minimal implementation GPT-style (fast patch)

import re, unicodedata

def slugify(s: str) -> str:

s = unicodedata.normalize("NFKD", s).encode("ascii", "ignore").decode()

s = re.sub(r"[^a-zA-Z0-9]+", "-", s).strip("-").lower()

return s

For many teams, GPT-5 will propose a compact fix like this quickly. Sonnet-4 may add a short docstring, edge-case notes, or suggest tests for emoji/RTL input—nice touches when quality gates matter. (Your results will vary—run tests, review diffs, and keep humans in the loop.)

Choosing for your team (inclusive, practical guidance)

- Solo devs & prototypes: Start with GPT-5 for speed and cost; switch if you see churn in diffs. OpenAIWIRED

- Large codebases & strict PR reviews: Try Sonnet-4 for steadier scoped edits; benchmark on your repos. Reddit

- Budget-sensitive orgs: Community reports note GPT-5 (and smaller GPT-5 variants) can be very cost-effective for near-top performance. Reddit

- Evidence over hype: Check SWE-bench and run a pilot on 10–20 real issues before standardizing. SWE-bench

Bottom line: Both models are excellent. If you value raw benchmark punch and integrations, GPT-5 is a great default. If you want calmer, requirements-faithful edits on sprawling repos, Sonnet-4 is a strong pick. The best model is the one that merges your PRs greenest—so test on your code, include accessibility and security checks, and invite the whole team into the evaluation.