1. Introduction

As computational demands continue to grow in fields such as artificial intelligence, data analysis, gaming, and scientific research, choosing the right processing unit has become increasingly important. Central Processing Units (CPUs), Graphics Processing Units (GPUs), and Tensor Processing Units (TPUs) are three key players in the hardware ecosystem. Each is designed to tackle specific tasks efficiently.

Understanding the nuanced differences between these architectures is crucial for optimizing performance and cost-efficiency in both consumer and enterprise applications.

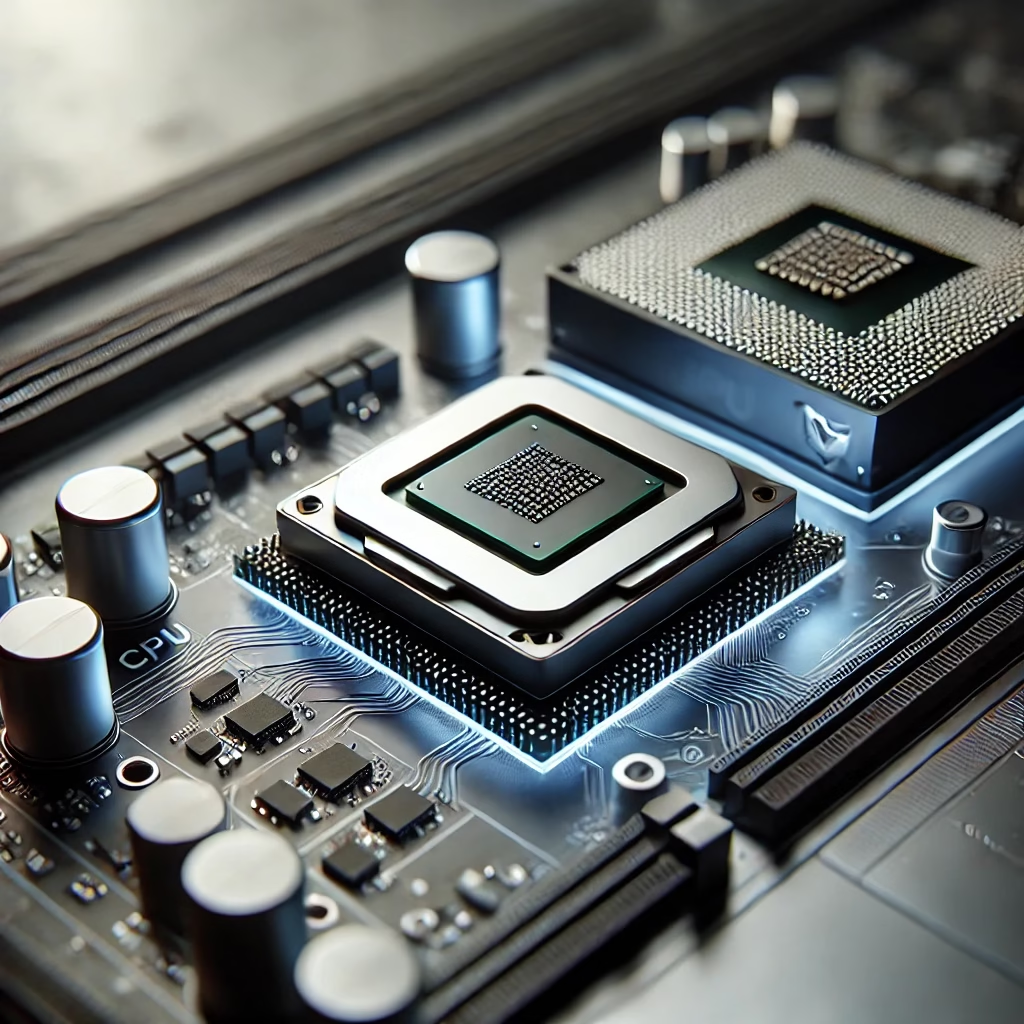

2. What is a CPU?

The CPU (Central Processing Unit) is the general-purpose processor in most computing devices. It is designed for sequential processing and is capable of handling a wide variety of tasks efficiently.

Key Characteristics:

- Core Count: Typically 2 to 64 cores across consumer and server parts, with some server processors offering more.

- Clock Speed: Typically between 2 GHz and 5 GHz.

- Cache: Large cache sizes (L1, L2, L3) to enhance single-threaded performance.

Strengths:

- Excellent at single-threaded tasks and complex logical operations.

- Handles operating systems, applications, and general-purpose computing tasks.

Limitations:

- Slower than GPUs and TPUs on massively parallel operations, because a few dozen cores cannot match thousands of simpler ones.

3. What is a GPU?

The GPU (Graphics Processing Unit) was initially designed for rendering graphics but has become a powerhouse in parallel computing.

Key Characteristics:

- Thousands of Cores: Modern GPUs can have more than 10,000 cores optimized for parallelism.

- Memory Bandwidth: High bandwidth memory (e.g., GDDR6, HBM2) to support data-intensive operations.

- Clock Speed: Generally lower than CPUs, around 1-2 GHz.

Strengths:

- Excels at parallel processing and matrix operations.

- Ideal for machine learning, deep learning, and large-scale data processing.

Limitations:

- Not well-suited for sequential tasks and general-purpose computations.
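The difference between parallel-friendly and sequential work can be illustrated with a small sketch in plain Python. The function names (`scale_all`, `running_balance`) and the sample data are hypothetical, and the thread pool is only a stand-in for "many workers"; this shows the shape of the data dependencies, not how a GPU is actually programmed.

```python
from concurrent.futures import ThreadPoolExecutor


def scale(x):
    # Each element is processed independently of every other element.
    return 2 * x


def scale_all(values, workers=4):
    # Independent work: elements can be handed to any worker in any order,
    # which is the pattern that maps well onto thousands of GPU cores.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(scale, values))


def running_balance(deposits, rate=0.5):
    # Sequential work: each step needs the result of the previous step,
    # so adding more workers cannot shorten the chain.
    balance = 0.0
    history = []
    for d in deposits:
        balance = balance * rate + d
        history.append(balance)
    return history


data = [1, 2, 3, 4]
print(scale_all(data))
print(running_balance(data))
```

The threads here only demonstrate that the order of the independent work does not matter; they are not expected to make this Python code faster.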

4. What is a TPU?

The TPU (Tensor Processing Unit) is a specialized processor designed by Google specifically for accelerating machine learning workloads.

Key Characteristics:

- Matrix Multiplication Units: Optimized for tensor computations.

- Low Precision Arithmetic: Focused on 8-bit and 16-bit operations for speed and efficiency.

- Cloud-based: Predominantly available through Google Cloud Platform.

Strengths:

- Designed exclusively for neural network training and inference.

- Exceptional performance in deep learning tasks.

Limitations:

- Limited to tensor-based computations and machine learning workloads.

- Less widely available than CPUs and GPUs, since access is mostly through cloud services.
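To make the idea of low-precision arithmetic concrete, here is a minimal sketch of a dot product computed on 8-bit integers and rescaled at the end. It is a generic symmetric quantization scheme written for illustration; the helper names `quantize` and `int8_dot` and the scale of 1/64 are assumptions, and this is not a description of how TPU hardware or any Google library implements it.

```python
def quantize(values, scale):
    # Map floats to signed 8-bit integers, clamping to the int8 range.
    return [max(-128, min(127, round(v / scale))) for v in values]


def int8_dot(a, b, scale_a, scale_b):
    # Multiply-accumulate on small integers, then rescale once at the end.
    qa = quantize(a, scale_a)
    qb = quantize(b, scale_b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulator
    return acc * scale_a * scale_b


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -2.0]
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b, scale_a=1 / 64, scale_b=1 / 64)
print(exact, approx)
```

The sample values were chosen so that they quantize without rounding error. For arbitrary inputs a small error remains, which is the accuracy traded for cheaper, faster integer arithmetic.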

5. Key Differences Between CPU, GPU, and TPU

| Feature | CPU | GPU | TPU |
|---|---|---|---|
| Purpose | General-purpose computing | Parallel computing, graphics | Deep learning optimization |
| Core Count | 2-64 | Thousands | Matrix Units |
| Clock Speed | 2-5 GHz | 1-2 GHz | Optimized for throughput |
| Memory Bandwidth | Moderate | High | Very High |
| Power Consumption | Moderate | High | Optimized for efficiency |
| Best For | Sequential tasks | Parallel tasks | Tensor operations |

6. Performance Analysis with Example Calculations

Example 1: Matrix Multiplication (1000 x 1000 Matrix)

Using a CPU:

Assuming a CPU processes one multiplication per cycle and operates at 3 GHz:

- Operations: 1000 x 1000 x 1000 = 1,000,000,000 multiplications (naive algorithm, additions ignored)

- Time per operation: 1 cycle

- Total Time = 1,000,000,000 cycles / 3,000,000,000 cycles per second = 0.33 seconds
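The operation count in this estimate follows from the naive algorithm: each of the n x n output cells needs a dot product of length n, giving n³ multiplications. The sketch below checks that count on a small matrix size and reproduces the CPU estimate. The function names are hypothetical, and the model is the same idealized one used in the text (one multiplication per cycle, no memory stalls).

```python
def count_multiplications(n):
    # Naive matrix multiplication: for each of the n*n output cells,
    # a dot product of length n is computed.
    count = 0
    for i in range(n):
        for j in range(n):
            for k in range(n):
                count += 1  # one multiplication a[i][k] * b[k][j]
    return count


def cpu_seconds(n, clock_hz=3_000_000_000, mults_per_cycle=1):
    # Idealized model from the text: one multiplication per clock cycle.
    return n ** 3 / (clock_hz * mults_per_cycle)


print(count_multiplications(10))  # small n keeps the loop fast
print(cpu_seconds(1000))
```

Real CPUs use vector instructions and multiple cores, so they usually do considerably better than one multiplication per cycle; the figure is a deliberately simple baseline.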

Using a GPU:

Assuming a GPU has 10,000 cores, each performing one multiplication per cycle at 1.5 GHz:

- Operations: 1,000,000,000 multiplications

- Operations per core (parallel): 1,000,000,000 / 10,000 cores = 100,000 operations per core

- Total Time = 100,000 cycles / 1,500,000,000 cycles per second = 0.0000667 seconds (about 67 microseconds)

Using a TPU:

Assuming the TPU is optimized to perform 128 multiplications per clock cycle at 700 MHz:

- Operations: 1,000,000,000 multiplications

- Effective throughput: 128 operations per cycle

- Total Cycles = 1,000,000,000 / 128 = 7,812,500 cycles

- Total Time = 7,812,500 / 700,000,000 = 0.0112 seconds
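The three estimates above share one formula: total operations divided by operations per cycle gives cycles, and cycles divided by clock frequency gives seconds. A short sketch that applies it to all three devices makes the comparison easy to rerun with other assumptions. The function name `estimate_seconds` and the `devices` table are hypothetical; the numbers are the ones assumed in this section, not measured values.

```python
def estimate_seconds(total_ops, ops_per_cycle, clock_hz):
    # Idealized throughput model: no memory stalls, perfect utilization.
    cycles = -(-total_ops // ops_per_cycle)  # ceiling division
    return cycles / clock_hz


TOTAL_OPS = 1000 ** 3  # multiplications for a 1000 x 1000 product

# (multiplications per cycle, clock in Hz), taken from the assumptions above
devices = {
    "CPU": (1, 3_000_000_000),
    "GPU": (10_000, 1_500_000_000),
    "TPU": (128, 700_000_000),
}

estimates = {
    name: estimate_seconds(TOTAL_OPS, ops, hz)
    for name, (ops, hz) in devices.items()
}

# Print from the smallest estimated time to the largest.
for name, seconds in sorted(estimates.items(), key=lambda kv: kv[1]):
    print(f"{name}: {seconds:.7f} s")
```

Changing the per-cycle throughput in `devices` shows how strongly the ranking depends on that single assumption.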

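Under these simplified assumptions the GPU is actually the fastest (about 0.067 ms), followed by the TPU (about 11 ms) and the CPU (about 330 ms). The TPU figure is held back by the assumed 128 multiplications per cycle. If the 128 is instead read as the side of a 128 x 128 matrix unit (16,384 multiply-accumulates per cycle), the same product would need roughly 61,000 cycles, or about 0.09 ms at 700 MHz. The ranking therefore depends heavily on the assumed per-cycle throughput, and the TPU's advantage on tensor-heavy workloads tends to come from its matrix units and energy efficiency, not from the numbers assumed here.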

7. Real-world Applications

| Application | CPU Usage | GPU Usage | TPU Usage |
|---|---|---|---|
| Web Browsing | High | None | None |
| Gaming | Moderate | High | None |
| Video Editing | High | High | None |
| Machine Learning Training | Low | Very High | Extremely High |
| Neural Network Inference | Low | High | Extremely High |

For instance, in machine learning frameworks like TensorFlow, using a TPU can substantially reduce training time compared to CPU-only setups and, for some models, compared to GPU setups as well.

8. Conclusion

Each processing unit excels in specific domains:

- CPUs are indispensable for general-purpose and sequential tasks.

- GPUs dominate parallel computing and data-heavy operations like graphics and machine learning.

- TPUs redefine efficiency and speed in deep learning applications.

Choosing between a CPU, GPU, or TPU depends on your computational needs. For instance, if you’re developing deep learning models using PyTorch, a GPU or TPU is often the optimal choice, while CPUs remain essential for everyday computing and system management.