Introduction 🧠💬🚀

If you’ve been hearing a lot about AI and chatbots like ChatGPT but aren’t quite sure how they work, you’ve come to the right place. The secret behind these impressive tools is something called a Transformer. This is a special kind of model that helps computers understand and generate human-like text.

Understanding Transformers and large language models (LLMs) is becoming increasingly important, as they are reshaping industries from customer support to content creation. From improving search engines to generating poetry, LLMs powered by Transformers are shaping the digital future. These models are also becoming essential in fields like healthcare, finance, and education, which makes it worth understanding how they function. This article walks you through the basics of LLM Transformers: what they are, how they work, and why they are so powerful. Even if you’re new to AI, don’t worry. We’ll break it all down in simple, easy-to-understand terms. 🤖📚✨


What is a Transformer? 🛠️📝💡

A Transformer is a type of neural network architecture designed to work with sequences such as text. It helps computers understand and generate text that sounds natural, like something a person would write.

Before Transformers, machines struggled to grasp context in longer texts. Traditional models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory models) processed sentences word by word. While effective for short inputs, they often forgot important context in longer sentences. This made them unreliable for complex conversations or long documents.

In 2017, a group of researchers introduced Transformers in a groundbreaking paper called “Attention Is All You Need”. The Transformer drastically improved on its predecessors by dropping recurrence entirely and relying on a mechanism called self-attention.

What makes Transformers special is that they can look at all the words in a sentence at the same time, instead of one by one. This parallel processing lets them capture relationships between distant words more reliably and train much faster on modern hardware. It also allows Transformers to scale up to extremely large datasets, enabling the development of models like GPT-4 and BERT. 🧑‍💻📖⚡

How Do Transformers Work? 🔍🔠⚙️

Let’s say you’re reading a simple sentence like:

“The cat sat on the mat.”

To understand this, you need to know that “cat” is the subject, and it’s performing the action of sitting on a mat. Transformers help computers figure this out.

They do this by:

- Looking at every word in the sentence simultaneously.

- Assigning different importance to each word based on the context (for example, “cat” is more important than “the” here).

This process is called self-attention, and it is the key to how Transformers work. Because Transformers can examine the entire input at once, they can better understand context and relationships between words—even over long paragraphs.

The self-attention mechanism works by computing a score for every pair of words in a sequence. These scores represent how much attention each word should pay to each other word. For instance, in the phrase “Alice gave Bob an apple,” the word “gave” might attend strongly to “Alice,” “Bob,” and “apple” (who gave, to whom, and what) and only weakly to “an.” The model learns these patterns during training rather than following hand-written rules.

Another advantage is that Transformers are highly parallelizable. Older recurrent models had to read a sentence word by word, with each step waiting on the previous one. Transformers instead process every position of the input at once. This makes them faster and more efficient for large-scale training. 🧑‍💻🔄🚀
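The following minimal NumPy sketch makes the idea of pairwise scores concrete. It assumes tiny, randomly generated 4-dimensional word vectors; real models learn much larger ones. As a simplification, it scores each pair of words with a plain dot product, whereas real Transformers first project words into separate query and key vectors. The nested loop and the single matrix product compute identical scores. This shows why the step parallelizes so well: no score depends on any other.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

# Hypothetical 4-dimensional vectors for "Alice gave Bob an apple";
# a real model learns much larger vectors during training.
words = ["Alice", "gave", "Bob", "an", "apple"]
rng = np.random.default_rng(0)
X = rng.normal(size=(len(words), 4))

# One pair at a time: each score is independent of all the others
loop_scores = np.zeros((len(words), len(words)))
for i in range(len(words)):
    for j in range(len(words)):
        loop_scores[i, j] = X[i] @ X[j]

# All at once: a single matrix product computes every pairwise score
scores = X @ X.T
assert np.allclose(scores, loop_scores)

# Softmax turns each row of scores into attention weights that sum to 1
weights = softmax(scores, axis=-1)
for word, row in zip(words, weights):
    print(word, "->", np.round(row, 2))
```

Each printed row shows how one word’s attention is spread across the sentence. Because the vectors are random, the specific numbers carry no linguistic meaning here.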

Visualization Example

Imagine you are reading this sentence:

“The quick brown fox jumps over the lazy dog.”

A self-attention visualization might look like this:

The -> quick (0.8), brown (0.5), fox (0.6)

quick -> brown (0.9), jumps (0.7)

brown -> fox (0.8), dog (0.4)

fox -> jumps (0.9), lazy (0.3)

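jumps -> over (0.8), dog (0.6)

Each word attends to other words with different strengths (represented by the numbers). This helps the model understand the structure and meaning of the sentence. The numbers here are made up for illustration. In a real model, the weights a word assigns across the whole sentence are normalized with a softmax so that they sum to 1.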

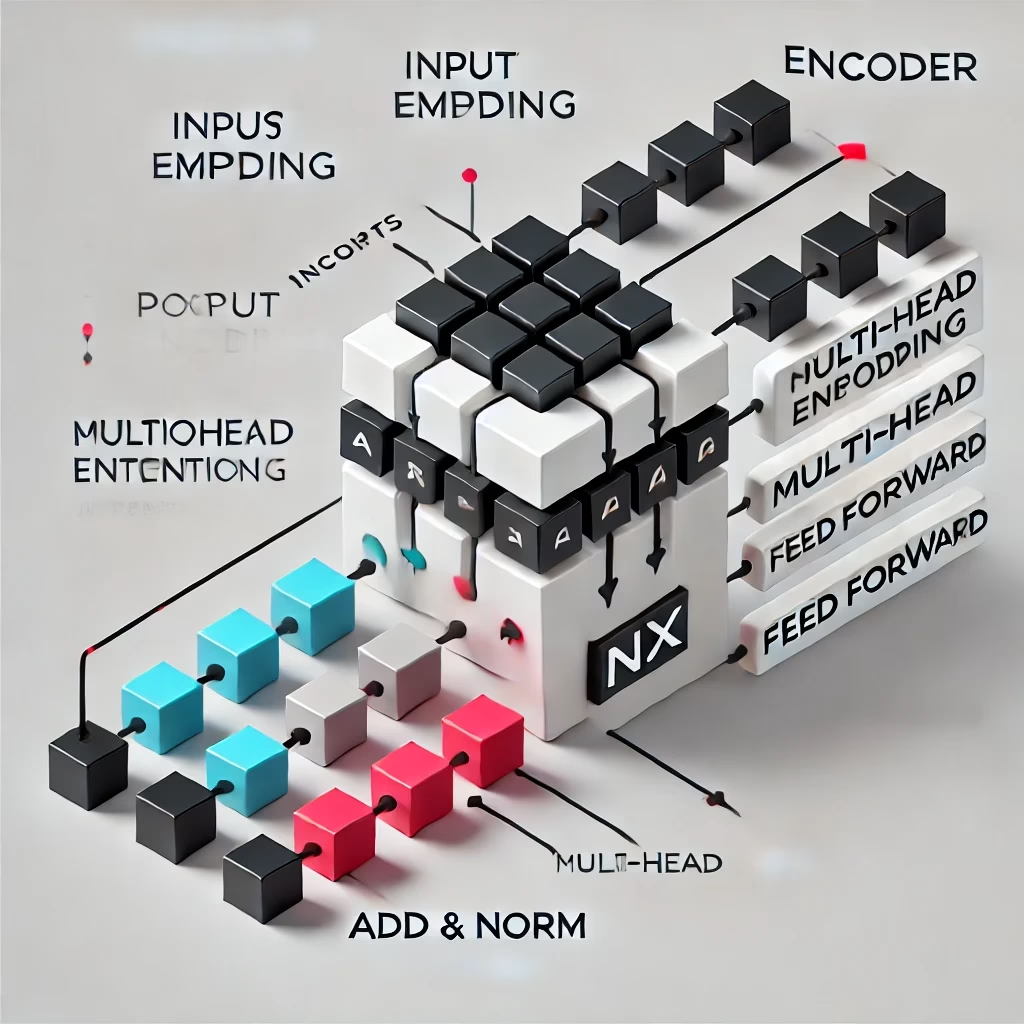

Key Components of Transformer Architecture 🏗️🔑📊

Here are the important parts of a Transformer explained in simple terms:

1. Self-Attention 🧠✨🔍

Self-attention is the core mechanism of the Transformer. It allows the model to focus on different parts of a sequence when processing a word. For example, when reading the sentence, “The cat sat on the mat,” the model can understand that “cat” is closely related to “sat” and “mat,” but “the” is less important. Self-attention assigns different weights to each word based on how important it is to understanding the current word. This enables the model to capture dependencies even if words are far apart in the text.
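Below is a minimal NumPy sketch of scaled dot-product self-attention, the formulation used in “Attention Is All You Need”. Each word is projected into a query, a key, and a value, and the output is softmax(Q·Kᵀ / √d_k)·V. The weight matrices are random stand-ins for parameters a real model would learn, and the sizes are arbitrary toy values.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Scaled dot-product self-attention over X of shape (seq_len, d_model)."""
    Q = X @ W_q  # queries: what each word is looking for
    K = X @ W_k  # keys: what each word offers to be matched against
    V = X @ W_v  # values: the information each word passes along
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (seq_len, seq_len) relevance scores
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights         # each output is a weighted mix of values

# Toy setup: 6 tokens ("The cat sat on the mat"), random stand-ins for learned weights
rng = np.random.default_rng(42)
seq_len, d_model, d_k = 6, 8, 4
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, weights = self_attention(X, W_q, W_k, W_v)
print(out.shape)      # (6, 4)
print(weights.shape)  # (6, 6)
```

Dividing by √d_k keeps the scores from growing too large as the vectors get longer. Without it, the softmax would saturate and training would slow down.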

2. Multi-Head Self-Attention 👀🔄🔬

Instead of applying self-attention once, multi-head attention runs several attention operations, called “heads,” in parallel. Each head uses its own learned projections of the input, so it can pick up on different aspects of the sentence. One might focus on grammar, another on subject-object relationships, and another on word meanings. The heads’ outputs are concatenated and passed through a final projection, giving a richer understanding of the input.
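Here is a minimal NumPy sketch of multi-head attention, again with random matrices standing in for learned weights. The model dimension is split evenly across heads (here, 8 dimensions into 2 heads of 4). Each head runs scaled dot-product attention on its own slice of the projected queries, keys, and values. The concatenated results then go through an output projection, W_o. The specific sizes are toy assumptions.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    seq_len, d_model = X.shape
    assert d_model % num_heads == 0, "d_model must divide evenly across heads"
    d_head = d_model // num_heads

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    # Every head computes its own attention pattern independently
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)
    heads = weights @ V                                    # (heads, seq, d_head)
    # Concatenate heads back to (seq_len, d_model), then mix with W_o
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 2
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

out, weights = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape)      # (6, 8)
print(weights.shape)  # (2, 6, 6): one attention pattern per head
```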

3. Positional Encoding 📍🔢🧮

Transformers process words in parallel, meaning they don’t automatically know the order of the words. Positional encoding solves this by adding a position-dependent vector to each word’s vector representation (its embedding), so the same word at different positions is represented differently. This way, the model can tell that “The cat sat on the mat” is different from “Mat the sat on cat the.”
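The original Transformer paper used fixed sine and cosine waves of different frequencies as its positional encoding. The sketch below implements that sinusoidal scheme in NumPy, assuming a toy input of 6 tokens with 8 dimensions and random stand-in embeddings. Many later models instead learn their position embeddings or use other schemes, so treat this as one common option rather than the only one.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d_model)); PE[pos, 2i+1] = cos(same angle)."""
    assert d_model % 2 == 0, "this sketch assumes an even model dimension"
    positions = np.arange(seq_len)[:, None]    # (seq_len, 1)
    two_i = np.arange(0, d_model, 2)[None, :]  # even dimension indices 0, 2, 4, ...
    angles = positions / np.power(10000.0, two_i / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return pe

# Hypothetical embeddings for the 6 tokens of "The cat sat on the mat"
tokens = ["The", "cat", "sat", "on", "the", "mat"]
rng = np.random.default_rng(0)
embeddings = rng.normal(size=(len(tokens), 8))

pe = sinusoidal_positional_encoding(len(tokens), 8)
x = embeddings + pe  # position information is added to each embedding, not appended

# Row 0 is [0, 1, 0, 1, ...] since sin(0) = 0 and cos(0) = 1
print(np.round(pe[:3], 3))
```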

4. Feedforward Neural Networks (FFN) 🖥️🔄📈

After self-attention, each word’s output is passed through a small feedforward neural network, which is applied to every position independently. In the original Transformer, this is two linear layers with a ReLU activation in between. It further transforms the information gathered by attention, letting the model extract deeper patterns.
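The feedforward step can be sketched in a few lines of NumPy. The sizes are toy assumptions (the original paper used d_model = 512 and an inner size of 2048), and the weights are random rather than trained. The final check confirms that running the network on the whole sequence at once gives the same result as running it on each position separately.

```python
import numpy as np

def feed_forward(X, W1, b1, W2, b2):
    """Position-wise feedforward network: Linear -> ReLU -> Linear."""
    hidden = np.maximum(0, X @ W1 + b1)  # expand to a wider hidden layer, apply ReLU
    return hidden @ W2 + b2              # project back down to d_model

rng = np.random.default_rng(0)
seq_len, d_model, d_ff = 6, 8, 32
X = rng.normal(size=(seq_len, d_model))
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

out = feed_forward(X, W1, b1, W2, b2)

# The same weights are applied to every position independently
row_by_row = np.stack([feed_forward(x[None, :], W1, b1, W2, b2)[0] for x in X])
assert np.allclose(out, row_by_row)
```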

5. Layer Normalization & Residual Connections 🔄✅🧑‍💻

These are techniques to stabilize the training process:

- Layer Normalization: Rescales each word’s vector to have zero mean and unit variance, followed by a learned scale and shift. This keeps values from becoming too large or too small as they pass through many layers.

- Residual Connections: Add a layer’s input back to its output, so information can skip around that layer and flow through the network more smoothly. This helps the model train faster and reduces the risk of gradients vanishing in deep networks.
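Here is a minimal NumPy sketch of both techniques working together. It uses the post-norm arrangement from the original paper, LayerNorm(x + Sublayer(x)). The sublayer is a hypothetical stand-in (a single random linear layer with ReLU) rather than real attention or feedforward code. Even though the input is deliberately scaled up, every token’s output vector comes back with a mean near 0 and a standard deviation near 1.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    # Normalize each token's vector to zero mean and unit variance,
    # then rescale and shift with the learned parameters gamma and beta
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta

def residual_block(x, sublayer, gamma, beta):
    # Post-norm: LayerNorm(x + Sublayer(x)).
    # The "x +" is the residual connection: the input skips around the sublayer.
    return layer_norm(x + sublayer(x), gamma, beta)

rng = np.random.default_rng(0)
d_model = 8
x = rng.normal(size=(6, d_model)) * 50  # deliberately large values
W = rng.normal(size=(d_model, d_model))

def sublayer(h):
    # Hypothetical stand-in for attention or the feedforward network
    return np.maximum(0, h @ W)

gamma, beta = np.ones(d_model), np.zeros(d_model)
y = residual_block(x, sublayer, gamma, beta)
print(np.round(y.mean(axis=-1), 6))  # close to 0 for every token
print(np.round(y.std(axis=-1), 3))   # close to 1 for every token
```

Many modern LLMs, starting with GPT-2, instead normalize before the sublayer, computing x + Sublayer(LayerNorm(x)). This arrangement is often reported to make very deep models easier to train.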

Together, these components make Transformers capable of understanding complex language patterns and handling long sequences efficiently.

Transformers in Large Language Models (LLMs) 🧠💻🌍

Transformers are the foundation of LLMs, which are models trained on vast amounts of text to understand and generate human-like language. The large-scale models behind tools like ChatGPT and Google Gemini are built on the Transformer architecture, which comes in three main types:

| Type | Description | Examples |
|---|---|---|
| Encoder-only | Good at understanding text. | BERT, RoBERTa |
| Decoder-only | Good at generating text. | GPT, LLaMA, Gemini |
| Encoder-Decoder | Good at transforming text. | T5, BART |
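One concrete difference between these types is which words each position is allowed to attend to. Encoder-only models let every word see the whole sentence. Decoder-only models apply a causal mask, so each word sees only itself and earlier words, which is what lets them generate text one token at a time. The NumPy sketch below applies such a mask to hypothetical random scores. Encoder-decoder models additionally use cross-attention from the decoder to the encoder’s output, which is not shown here.

```python
import numpy as np

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def attention_weights(scores, causal):
    if causal:
        # Decoder-style: block attention to future positions (the upper triangle)
        seq_len = scores.shape[0]
        future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)  # exp(-inf) = 0 after softmax
    return softmax(scores, axis=-1)

rng = np.random.default_rng(0)
scores = rng.normal(size=(5, 5))  # hypothetical raw attention scores for 5 tokens

encoder_w = attention_weights(scores, causal=False)  # every token sees every token
decoder_w = attention_weights(scores, causal=True)   # each token sees itself and earlier tokens

print(np.round(decoder_w, 2))
```

In the printed decoder matrix, everything above the diagonal is zero. Because the first token can only attend to itself, its row is [1, 0, 0, 0, 0].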

Simple Python Example

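This snippet uses the Hugging Face Transformers library to continue a prompt with GPT-2, a small, openly available decoder-only model. It assumes the library and a backend such as PyTorch are installed.

```python
# Install first: pip install transformers torch
from transformers import pipeline

# Build a text-generation pipeline backed by GPT-2 (downloaded on first use)
generator = pipeline('text-generation', model='gpt2')

# max_length caps the total length in tokens, prompt included
result = generator("Once upon a time", max_length=50, num_return_sequences=1)

# The pipeline returns a list with one dict per generated sequence
print(result[0]['generated_text'])
```

Popular LLMs Based on Transformers 🌐📚🚀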

| Model | Type | What It’s Good For |
|---|---|---|
| GPT-4 | Decoder-only | Chatbots, writing articles, coding |
| BERT | Encoder-only | Answering questions, finding information in text |
| T5 | Encoder-Decoder | Translating languages, summarizing articles |
| LLaMA | Decoder-only | Research, open-source AI tools |
| Gemini | Decoder-only | Working with text, images, and video together |

Conclusion 🎓🚀📖

Transformers have revolutionized AI. They power the tools we rely on daily, from search engines to chatbots. As LLMs continue to grow, understanding Transformers will become increasingly valuable across industries. The future of AI is being written—quite literally—by Transformers. 🌍📈✨

If you’re curious to try Transformers, check out the Hugging Face Transformers library.