In recent years, local AI models have gained traction, allowing users to run large language models (LLMs) on their personal devices. Ollama has been a game-changer for self-hosting LLMs, enabling efficient, fast, and private AI on a MacBook Pro M1/M2, Windows, or Linux machine. But what about image generation?

While Ollama itself is primarily focused on text-based models, it’s possible to integrate AI-driven image generationinto a local or API-based workflow. This post explores various ways to generate images using Ollama-like setups, including Stable Diffusion, ComfyUI, LLaVA, and cloud-based APIs like DALL·E. We’ll take a deeper dive into each of these approaches, discussing how they work, their benefits, setup processes, and real-world applications.

Why Ollama Doesn’t Directly Support Image Generation

Unlike models like Stable Diffusion, which generate images, Ollama is optimized for LLMs that process and generate text. The Ollama CLI currently supports models like Mistral, Phi-2, LLaMA, and Code Llama, which focus on language-based tasks.

However, that doesn’t mean you can’t create a workflow where text and image generation coexist. With the right approach, you can leverage Ollama for text-based tasks while seamlessly integrating it with image-generation tools. Let’s explore these possibilities.

1. Running Stable Diffusion Locally for Image Generation

For those who want full control over AI-generated images, running Stable Diffusion locally is the best option. It works well on Apple M1/M2 Macs, Windows, and Linux machines, making it a great choice for developers, artists, and AI enthusiasts.

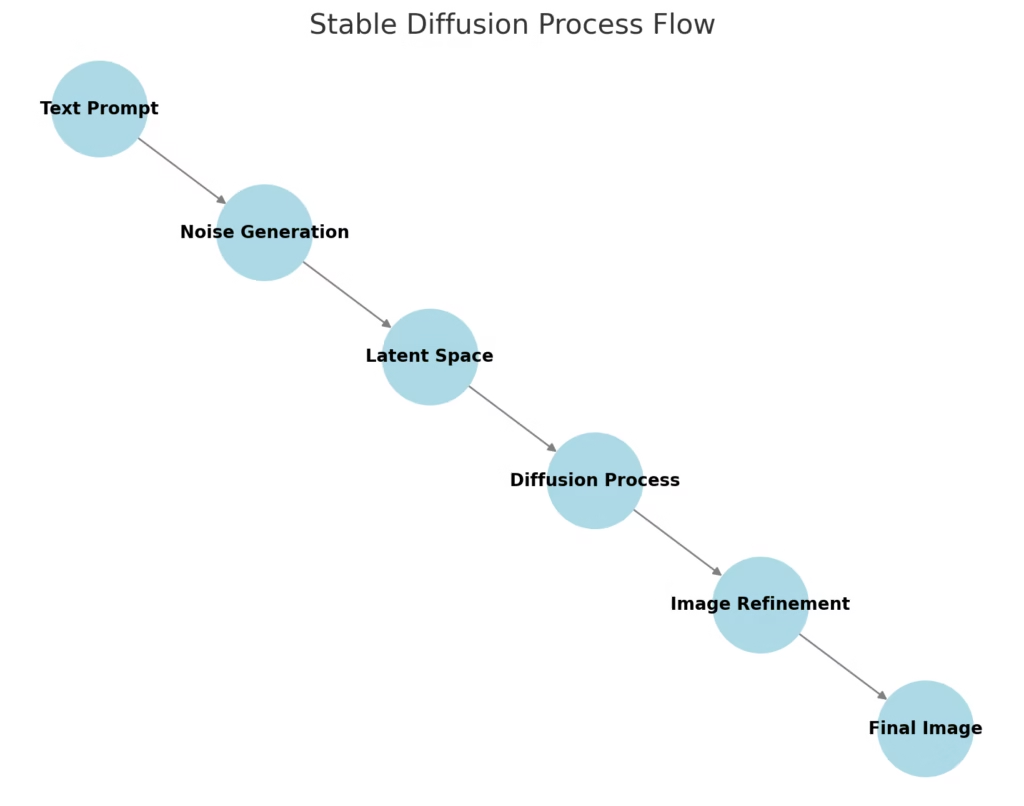

How Stable Diffusion Works

Stable Diffusion is a latent diffusion model that transforms text prompts into images. It does this by generating noise and gradually refining it into a coherent image using deep neural networks. Unlike simple GAN-based image generators, diffusion models create much more detailed and refined visuals.

Why Use Stable Diffusion Locally?

- Privacy: No cloud dependency; images stay on your device.

- Customization: You can fine-tune models or train your own.

- No API Limits: Generate as many images as your hardware allows.

- Lower Costs: No need to pay for API calls.

Setting Up Stable Diffusion on a MacBook Pro M1/M2

On MacOS, you can run Stable Diffusion using diffusers, a library from Hugging Face.

Install Dependencies

pip install diffusers transformers torch torchvision accelerateRun Stable Diffusion

from diffusers import StableDiffusionPipeline

import torch

model_id = "CompVis/stable-diffusion-v1-4"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.to("mps") # "mps" enables Apple Metal support for fast generation

prompt = "A futuristic cyberpunk city at night with neon lights"

image = pipe(prompt).images[0]

image.show()This locally generates an image without needing an internet connection.

Expanding Applications: More Than Just Art

Stable Diffusion is not just for digital artists; it has applications in:

- Marketing & Branding: Generating product mockups and visuals.

- Game Development: Creating concept art and environments.

- Education: Helping students visualize historical events or scientific concepts.

Case Study: AI-Powered Design Studio

A graphic designer working in branding wanted to create quick visual prototypes based on client descriptions. Instead of using stock images or manually sketching, they integrated Stable Diffusion into their workflow, significantly speeding up the ideation process. Within seconds, they could visualize multiple concepts and refine them based on feedback.

2. Using ComfyUI: A No-Code Approach to Image Generation

For those who prefer a visual interface, ComfyUI provides a node-based workflow for image generation. It’s an excellent tool for users who want powerful AI image generation without writing code.

Installing ComfyUI

git clone https://github.com/comfyanonymous/ComfyUI.git

cd ComfyUI

pip install -r requirements.txt

python main.pyOnce installed, you can generate images by connecting nodes that define prompts, models, and diffusion parameters.

Case Study: Indie Game Development

A solo game developer wanted to create concept art for their game but lacked the budget for a full-time artist. They used ComfyUI to generate characters, backgrounds, and UI elements. By tweaking parameters and experimenting with different models, they built a consistent art style without outsourcing.

Choosing the Right Image Generation Approach

| Approach | Best For | Pros | Cons |

|---|---|---|---|

| Stable Diffusion (Local) | Artists, designers, developers | No API costs, full control | Requires a powerful GPU |

| ComfyUI | Beginners, UI-based users | No coding needed | Learning curve for node-based workflow |

| DALL·E API | Bloggers, SaaS, real-time apps | No local GPU needed, high-quality images | API costs, limited customization |

| LLaVA (Ollama-based) | Vision tasks, image captioning | Works with Ollama | Not for generating images |

Final Thoughts

While Ollama itself doesn’t generate images, it can be seamlessly integrated with image-generation tools like Stable Diffusion, ComfyUI, and cloud-based APIs. The choice depends on whether you need local generation, UI-based workflows, or API-based solutions.

For those who prefer a self-hosted AI setup, combining Ollama for text and Stable Diffusion for images creates a powerful, privacy-friendly AI assistant. Additionally, newer multimodal models may soon offer better integration between text and image AI, opening up even more creative possibilities. By experimenting with these tools, you can find the perfect balance between text and visual AI for your specific needs.